Welcome to our exploration of how the Pentagon is using AI to streamline its employee vetting. This article looks at how the Defense Counterintelligence and Security Agency (DCSA) is adopting AI tools while aiming to keep their use transparent and ethical. Join us as we uncover the ‘mom test,’ the challenges of bias and privacy, and the future of AI in national security.

The Defense Counterintelligence and Security Agency, which grants security clearances to millions of American workers, is using AI to speed up its work. No ‘black boxes’ are allowed, its director says.

Imagine the iconic Pentagon building, not as it stands today, but as it might appear in a future heavily influenced by artificial intelligence. The structure’s familiar five-sided shape remains, but its façade is no longer stark concrete but a dynamic, pulsating web of data streams, each one a live feed of global information. These streams—comprising everything from news feeds to social media updates, satellite imagery to weather patterns—flow like electric currents, illuminating the building in a kaleidoscope of colors, each hue signifying a different data type. Surrounding the building, vast arrays of servers hum with AI algorithms, their complex computations visualized as translucent holograms floating in the air. These algorithms, powered by advanced machine learning, sift through the data streams, seeking patterns and anomalies that might indicate emerging threats.

The integration of AI into the security clearance process is symbolized by a continuous dance of light and data around the Pentagon. At the entrance, visitors are greeted by an AI interface, a smooth, futuristic screen displaying real-time clearance updates. As personnel approach, the interface scans their biometrics—facial recognition, retinal scan, voiceprint analysis—cross-referencing these data points with the vast datasets flowing around the building. Cleared personnel trigger a cascade of approvals through the system, visible as a ripple of green light racing up the data streams. Conversely, any irregularities cause the relevant data points to blink red, AI-driven protocols kicking in to investigate further. This seamless integration of AI and physical security embodies the future of defense: proactive, data-driven, and hyper-vigilant.

The ‘Mom Test’: A Common-Sense Check

The ‘Mom Test’ is a straightforward, common-sense approach used by David Cattler, the Director of the Defense Counterintelligence and Security Agency (DCSA), to keep the agency’s use of AI in employee vetting ethical and transparent. The test is remarkably simple: would you be comfortable explaining the AI’s decision-making process to your mom? The analogy serves as a quick ethical check on whether the AI systems used in vetting are fair, understandable, and unbiased. It encourages designers and users of AI to step back and consider the broader implications of their algorithms, fostering a culture of responsibility and ethical awareness.

The Mom Test helps maintain public trust in several ways:

- It promotes transparency by encouraging explanations that are simple and accessible to anyone, not just AI experts.

- It fosters accountability by ensuring that the AI’s decisions can be clearly explained and justified.

- It builds confidence among the public that the AI systems used by the DCSA are designed with ethical considerations at the forefront.

In an era where AI is increasingly used in critical decision-making processes, such transparency and accountability are vital for public acceptance and support.

However, while the Mom Test is a useful benchmark, it is not without its criticisms:

- It may oversimplify complex ethical dilemmas, reducing them to a single, subjective question.

- It relies on the assumption that everyone has the same moral compass, which is not always the case.

- It could potentially hinder innovation by discouraging the exploration of complex AI models that might be beneficial but difficult to explain simply.

Despite these drawbacks, the Mom Test serves as a valuable first check in a multi-step ethical review process, ensuring that as AI continues to evolve, it does so with a strong ethical foundation.

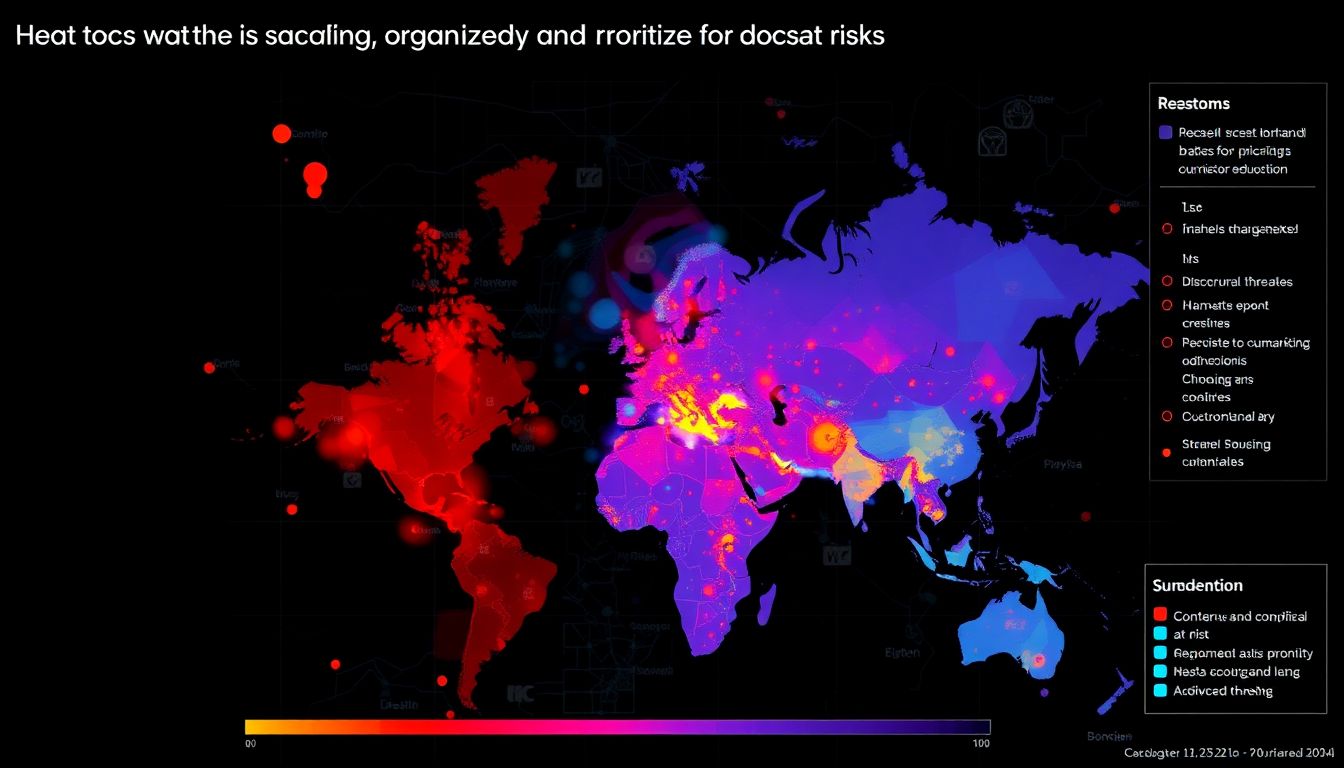

AI in Action: Organizing and Interpreting Data

The Defense Counterintelligence and Security Agency (DCSA) has been using a suite of AI tools and methods to organize and interpret data, with a strong emphasis on transparency and on avoiding ‘black boxes.’ Among them are Explainable AI (XAI) models, which are designed to show clearly how conclusions are drawn from data. This transparency is crucial in high-stakes environments where understanding the rationale behind AI decisions is paramount. DCSA uses techniques such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations) to interpret the outputs of complex machine learning models. These methods break down the contribution of each feature to the model’s decision, so that the AI’s reasoning is both understandable and accountable.
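SHAP is grounded in Shapley values from cooperative game theory: a feature’s attribution is its marginal contribution to the model’s output, averaged over every order in which the features could be added. As a minimal sketch, and not DCSA’s actual tooling or the `shap` library’s API, the code below computes exact Shapley values by brute force for a hypothetical two-feature scoring function. The feature names, weights, and the choice of a zero baseline are illustrative assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet

Features = Dict[str, float]


def shapley_values(model: Callable[[Features], float],
                   instance: Features,
                   baseline: Features) -> Dict[str, float]:
    """Exact Shapley values by brute-force enumeration of feature coalitions.

    A feature "absent" from a coalition is replaced by its baseline value,
    which is one common way (an assumption here) to define a coalition's value.
    """
    names = list(instance)
    n = len(names)

    def value(coalition: FrozenSet[str]) -> float:
        # Present features keep the instance value; absent ones use the baseline.
        x = {f: instance[f] if f in coalition else baseline[f] for f in names}
        return model(x)

    phi: Dict[str, float] = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical toy scoring function; the feature names are illustrative only.
def toy_score(x: Features) -> float:
    return 2.0 * x["missed_filings"] + 0.5 * x["debt_ratio"]


instance = {"missed_filings": 3.0, "debt_ratio": 4.0}
baseline = {"missed_filings": 0.0, "debt_ratio": 0.0}

if __name__ == "__main__":
    attributions = shapley_values(toy_score, instance, baseline)
    print(attributions)  # {'missed_filings': 6.0, 'debt_ratio': 2.0}
```

For a linear model with a zero baseline, each attribution reduces to weight times value, and the attributions sum to `toy_score(instance) - toy_score(baseline)`. Exact enumeration grows exponentially with the number of features, which is why libraries such as SHAP rely on sampling approximations or model-specific shortcuts for larger models.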

DCSA’s focus on transparency is further reinforced by its use of rule-based systems and decision trees, which provide a clear, logical flow of how data is processed and decisions are made. These tools are particularly effective in prioritizing threats, as they allow analysts to trace the path from raw data to actionable intelligence. By employing these interpretable models, DCSA can better communicate findings to stakeholders, fostering trust and collaboration. Additionally, DCSA uses Natural Language Processing (NLP) to extract and structure information from unstructured data sources, making data easier to organize and interpret. This approach not only speeds up analysis but also helps ensure that critical information is not overlooked.
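To illustrate what a traceable rule-based flow can look like, here is a minimal sketch of case triage that returns both a priority and the list of rules that fired. The rules, field names, point values, and thresholds are hypothetical and do not reflect any real DCSA criteria.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Record], bool]
    points: int


@dataclass
class TriageResult:
    score: int = 0
    fired: List[str] = field(default_factory=list)  # audit trail of matched rules

    @property
    def priority(self) -> str:
        # Illustrative thresholds, not real policy values.
        if self.score >= 5:
            return "high"
        if self.score >= 2:
            return "medium"
        return "low"


# Hypothetical rules; names, fields, and point values are assumptions.
RULES: List[Rule] = [
    Rule("unreported_foreign_travel", lambda r: r.get("unreported_trips", 0) > 0, 3),
    Rule("large_delinquent_debt", lambda r: r.get("delinquent_debt_usd", 0) > 10_000, 2),
    Rule("overdue_reinvestigation", lambda r: r.get("days_overdue", 0) > 30, 1),
]


def triage(record: Record, rules: List[Rule] = RULES) -> TriageResult:
    """Apply every rule in order and record which ones fired, so an analyst
    can trace exactly why a case received its priority."""
    result = TriageResult()
    for rule in rules:
        if rule.condition(record):
            result.score += rule.points
            result.fired.append(rule.name)
    return result


if __name__ == "__main__":
    case = {"unreported_trips": 1, "delinquent_debt_usd": 12_000, "days_overdue": 0}
    outcome = triage(case)
    print(outcome.priority, outcome.fired)
    # high ['unreported_foreign_travel', 'large_delinquent_debt']
```

Because every point in the score maps to a named rule, the justification for a priority can be read directly from `fired`. A decision tree offers a similar property by exposing the path from root to leaf.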

While DCSA’s AI tools offer significant advantages, it is important to acknowledge the challenges and potential drawbacks. The pursuit of transparency can come at the cost of model complexity and accuracy: simpler, more interpretable models may not capture the nuances of complex datasets as effectively as more opaque, ‘black box’ models. DCSA navigates this trade-off by continuously refining its models and integrating feedback from human analysts, an iterative process that helps balance interpretability and performance. Moreover, the agency’s commitment to transparency fosters a culture of accountability, which is crucial in sensitive security contexts. By prioritizing explainability, DCSA not only makes its data analysis more efficient but also builds a robust framework for threat prioritization and response.

Challenges and Concerns: Bias, Privacy, and Beyond

The integration of AI in security clearance processes, while promising greater efficiency and accuracy, presents several significant challenges and concerns. Chief among them is bias. AI algorithms learn from the data they are fed, and if that data is biased, the outcomes are likely to reflect those biases. This could give unfair advantages or disadvantages to certain groups, potentially discriminating against individuals based on race, gender, or other protected characteristics. The privacy implications cannot be overlooked either: sensitive personal information is at risk of exposure or misuse, especially given the vast amount of data that AI systems can process and analyze.

Another critical concern is data security. Security clearance processes involve handling and storing highly sensitive information, making them attractive targets for cyber threats. Any vulnerability in the AI system could be exploited, leading to data breaches with severe consequences. Additionally, there are apprehensions about the transparency and explainability of AI decisions. The ‘black box’ nature of many AI algorithms makes it difficult to understand how decisions are reached, which is problematic in high-stakes contexts like security clearance, where accountability is paramount.

To address these challenges and ensure fairness and compliance, the Defense Counterintelligence and Security Agency (DCSA) has implemented several measures. These include:

- Establishing clear guidelines for data collection, storage, and usage to mitigate privacy risks and ensure data security.

- Implementing rigorous testing and validation processes to identify and address biases in AI algorithms.

- Promoting transparency by using explainable AI models and providing clear explanations for decisions made.

- Regularly reviewing and updating AI systems to keep up with evolving best practices and regulatory requirements.

- Encouraging ongoing dialogue with stakeholders, including the public, to address concerns and foster trust in the use of AI for security clearance processes.
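The article does not say which bias tests DCSA runs. One widely used screening metric is the adverse impact ratio behind the "four-fifths rule" from U.S. employment-selection guidelines: compare each group’s rate of favorable outcomes with the highest group’s rate and flag ratios below 0.8. The sketch below assumes outcomes arrive as hypothetical `(group, favorable)` pairs; a ratio below the threshold is a prompt for closer review, not proof of bias.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Outcome = Tuple[str, bool]  # (group label, received a favorable decision?)


def selection_rates(outcomes: Iterable[Outcome]) -> Dict[str, float]:
    """Share of favorable decisions within each group."""
    favorable: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        total[group] += 1
        if ok:
            favorable[group] += 1
    return {g: favorable[g] / total[g] for g in total}


def impact_ratios(outcomes: Iterable[Outcome]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        # No group received a favorable decision; treat as no measurable disparity.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


def flagged_groups(outcomes: Iterable[Outcome], threshold: float = 0.8) -> List[str]:
    """Groups whose ratio falls below the four-fifths (0.8) screening threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Synthetic data: group A favorable 90/100, group B favorable 60/100.
    data = [("A", i < 90) for i in range(100)] + [("B", i < 60) for i in range(100)]
    print(impact_ratios(data))   # B's ratio is about 0.667
    print(flagged_groups(data))  # ['B']
```

The ratio is deliberately coarse: it ignores sample size and confounding factors, so in practice it would be paired with statistical tests and human review.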

FAQ

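What is the ‘mom test’ and why is it important?

It is a common-sense check used by DCSA Director David Cattler: would you be comfortable explaining the AI’s decision-making process to your mom? It keeps the agency’s use of AI understandable and accountable, which helps maintain public trust.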

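How does DCSA use AI to speed up its work?

DCSA uses AI to organize and interpret data and to prioritize threats, relying on explainable approaches such as SHAP and LIME, rule-based systems, decision trees, and NLP for unstructured sources, so that no ‘black boxes’ are involved.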

What are the potential challenges of using AI in security clearance processes?

- Bias in AI algorithms

- Privacy concerns

- Data security risks

- Misidentification of individuals

DCSA addresses these challenges through oversight, transparency, and compliance measures.

How does DCSA ensure the ethical use of AI?

- Avoiding ‘black boxes’ and understanding how AI tools work

- Proving the credibility and objectivity of AI tools

- Operating under oversight from the White House, Congress, and other administrative bodies

- Adapting as societal values and biases change