Welcome to The Indian Express! Today, we’re diving into the fascinating world of AI and exploring how China’s DeepSeek-V3 model is making waves and challenging the dominance of OpenAI. This article will take you through the intricacies of DeepSeek-V3, its capabilities, and what it means for the future of AI. Buckle up for an exciting journey!

DeepSeek-V3 is not just about one impressive model; it is a signal of where the industry is heading.

Imagine a neon-lit cyber arena, a sprawling expanse of digital competition, where the contenders are not flesh and blood, but intricate constructs of code and algorithms. In one corner, DeepSeek-V3, a sleek, metallic behemoth, humming with the collective intelligence of its cutting-edge architecture. Its data pathways pulse with liquid coolant, a visual testament to the sheer processing power contained within. Its form is a towering monolith, adorned with holographic displays flickering with real-time data streams, a stark contrast to the minimalist, almost ethereal design of its competitor.

In the opposing corner, OpenAI’s models, a levitating, crystalline structure, reminiscent of a futuristic neural network. Its form is dynamic, shifting seamlessly between complex geometries, each face a glowing testament to the raw computational power within. It’s a mesmerizing dance of technology, as it continually optimizes its configuration in real-time, a physical manifestation of its adaptive learning capabilities.

The arena is abuzz with anticipation, virtual spectators tuning in from across the globe, their avatars flickering into existence around the arena. The competition is not one of brute force, but of finesse, of adaptability. It’s a battle of wits, of processing power, of learning algorithms. The air is thick with data, a veritable storm of ones and zeros, as the two competitors face off, ready to push the boundaries of what artificial intelligence can achieve. The future is here, and it’s a spectacle to behold.

The Birth of DeepSeek-V3

DeepSeek-V3 was developed by DeepSeek, a Chinese AI company based in Hangzhou and backed by the quantitative hedge fund High-Flyer. Unlike GPT-4o and Claude 3.5 Sonnet, which are closed models available only through their makers’ products and APIs, DeepSeek-V3 was released with openly downloadable weights and a detailed technical report. Its appeal lies less in who built it than in how little compute its developers say it needed to reach high performance.

DeepSeek-V3’s training process is notably cost-effective. DeepSeek’s technical report puts the full training run at about 2.788 million H800 GPU hours, which it prices at roughly $5.6 million under an assumed rental rate of $2 per GPU hour. That figure covers the final training run only, not earlier research and experiments. Several strategies contribute. Firstly, the sparse Mixture-of-Experts design activates only about 37 billion of the model’s 671 billion parameters for each token, so each training step costs far less than it would in a dense model of the same size. Secondly, the developers use low-precision (FP8) mixed-precision training and overlap computation with communication to get more out of the available hardware. Lastly, supervised fine-tuning and reinforcement learning build on the pretrained model rather than starting over, which keeps post-training comparatively cheap. Some of the key aspects of its training process are:

- Sparse Mixture-of-Experts architecture

- FP8 mixed-precision training

- Overlapped computation and communication

- Fine-tuning and reinforcement learning on top of the pretrained model
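To put the cost claim in numbers, here is a minimal arithmetic sketch. The GPU-hour figures are the ones given in DeepSeek’s technical report; the $2 per GPU hour rate is the report’s own rental-price assumption, not a measured cost, and the helper name `training_cost_usd` is hypothetical.

```python
# GPU hours as reported in DeepSeek's technical report, in thousands of H800 hours.
GPU_HOURS_K = {
    "pre-training": 2664,
    "context extension": 119,
    "post-training": 5,
}

# Assumption: a rental price per GPU hour, not an audited cost.
ASSUMED_USD_PER_GPU_HOUR = 2.0


def training_cost_usd(gpu_hours_k, usd_per_gpu_hour):
    """Total cost in USD for a dict of stage -> thousands of GPU hours."""
    return sum(gpu_hours_k.values()) * 1_000 * usd_per_gpu_hour


total_hours_k = sum(GPU_HOURS_K.values())
cost = training_cost_usd(GPU_HOURS_K, ASSUMED_USD_PER_GPU_HOUR)

# Share of parameters active per token (37B of 671B total).
active_share = 37 / 671

print(f"Total GPU hours: {total_hours_k}K")
print(f"Estimated cost: ${cost:,.0f}")
print(f"Active parameters per token: {active_share:.1%}")
```

Changing the assumed hourly rate scales the estimate linearly, which is why the headline dollar figure is best read as an order of magnitude.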

DeepSeek-V3 stands out from other AI models like GPT-4o and Claude 3.5 Sonnet in several ways. Firstly, because its weights and technical report are public, users can see far more of how the model is built than closed models allow. Secondly, DeepSeek-V3 is highly customizable, since developers can fine-tune the released weights for specific tasks. Additionally, the open release invites community contribution and peer review, which supports continuous improvement. Furthermore, openness makes it easier for outside researchers to examine the model for bias and fairness problems, although it does not remove those problems on its own. These points can be summarized as:

- Transparency and interpretability

- High customizability

- Open-source nature

- Ethical considerations

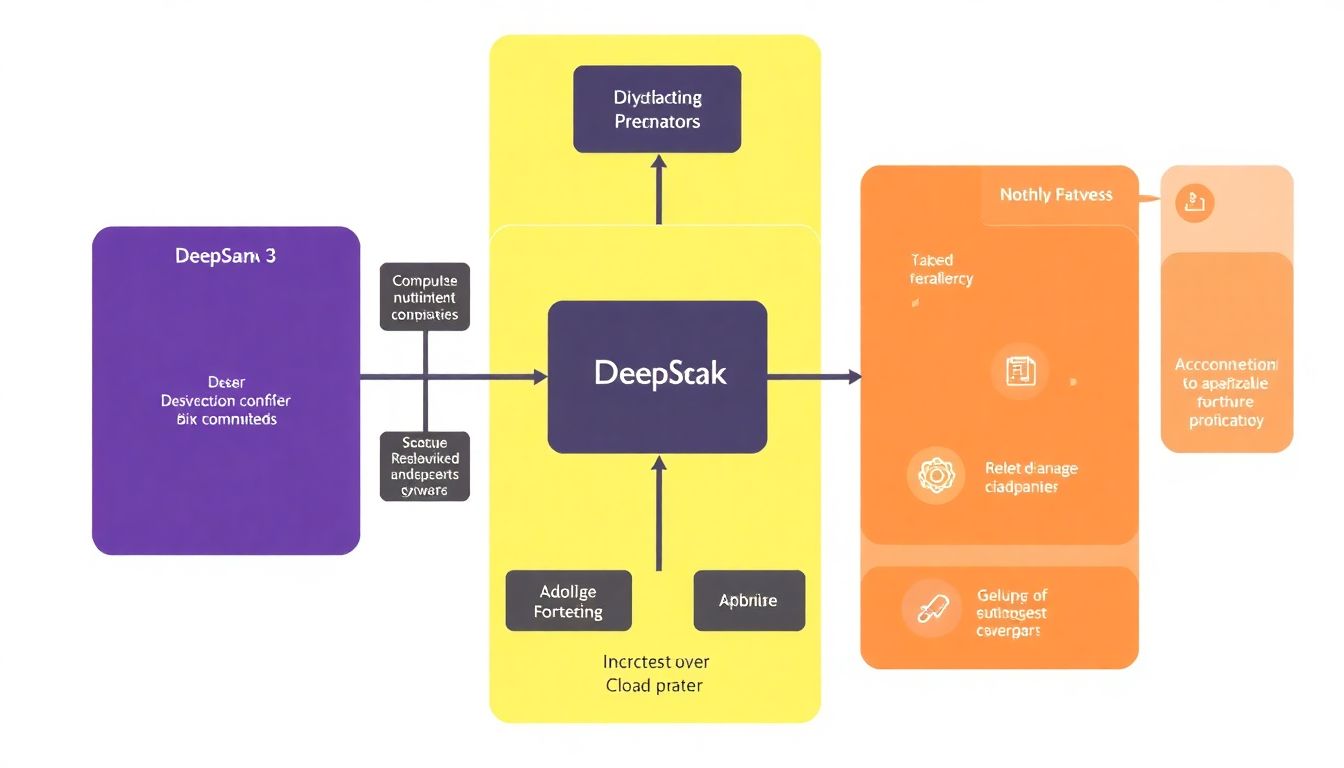

Inside the DeepSeek-V3 Architecture

DeepSeek-V3 introduces a sophisticated architecture that leverages several innovative mechanisms to enhance model capacity and efficiency. At its core lies the Mixture-of-Experts (MoE) model, a design that activates only a subset of the model’s parameters for each input, thereby increasing capacity without a proportional increase in computation cost. In DeepSeek-V3, the MoE model consists of a number of expert networks, each specializing in different aspects of the input data. The model dynamically selects a combination of these experts to process each input, allowing it to handle diverse and complex data more effectively.
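The routing idea described above can be sketched in a few lines. This is a toy illustration of generic top-k expert routing, not DeepSeek’s implementation: the softmax scoring, the function names, and the four scaling “experts” are all assumptions chosen to keep the example small.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(scores, k):
    """Return the indices of the k best experts and their renormalized weights."""
    probs = softmax(scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return top, [probs[i] / norm for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum of the k selected experts; unselected experts never run."""
    chosen, weights = route_top_k(router_scores, k)
    out = [0.0] * len(x)
    for idx, w in zip(chosen, weights):
        y = experts[idx](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical experts: each one just scales its input by a fixed factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]

output, chosen = moe_forward(
    [1.0, -1.0], experts, router_scores=[0.1, 2.0, -1.0, 2.0], k=2
)
print(chosen, output)
```

Only two of the four experts are evaluated for this input, which is the source of the capacity-without-proportional-compute trade-off: the parameter count grows with the number of experts, while per-token work grows only with `k`.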

One of the standout features of DeepSeek-V3 is its implementation of Multi-Head Latent Attention (MLA). Unlike traditional multi-head attention, which caches full keys and values for every head, MLA compresses keys and values into a compact latent vector and reconstructs them when attention is computed. This shrinks the key-value cache that dominates memory use during long-context inference. Key aspects of MLA include:

- Latent Compression: Each token’s hidden state is projected down to a low-dimensional latent vector, and only that vector (plus a small positional component) is cached.

- Attention Mechanism: Per-head keys and values are recovered from the latent vector through up-projection matrices, after which attention proceeds as in the standard multi-head design.

- Integration: The outputs of all heads are then combined into a single coherent representation, as in standard multi-head attention.
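MLA is usually described as a low-rank compression of keys and values: cache a small latent vector per token and expand it on demand. The sketch below illustrates that idea with tiny hand-written matrices and then compares cache sizes. The matrices are made up, and the dimensions in the size comparison (128 heads of width 128, a 512-wide latent, a 64-wide positional part) are illustrative assumptions rather than a statement of DeepSeek’s exact configuration.

```python
def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def kv_cache_per_token(n_heads, head_dim, latent_dim=None, rope_dim=0):
    """Cached values per token per layer.

    Standard attention stores a key and a value for every head.
    A latent cache stores one compressed vector plus a positional part.
    """
    if latent_dim is None:
        return 2 * n_heads * head_dim
    return latent_dim + rope_dim


# Toy projection: hidden size 4 compressed to a latent of size 2.
hidden = [1.0, 2.0, 3.0, 4.0]
W_down = [[0.5, 0.0, 0.5, 0.0],
          [0.0, 0.5, 0.0, 0.5]]
W_up_k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
W_up_v = [[2.0, 0.0], [0.0, 2.0], [1.0, 0.0], [0.0, 1.0]]

latent = matvec(W_down, hidden)   # this is all that gets cached
keys = matvec(W_up_k, latent)     # rebuilt when attention is computed
values = matvec(W_up_v, latent)

full = kv_cache_per_token(n_heads=128, head_dim=128)
compact = kv_cache_per_token(n_heads=128, head_dim=128, latent_dim=512, rope_dim=64)
print(latent, keys, values)
print(f"standard: {full}, latent: {compact}, ratio: {full / compact:.1f}x")
```

The trade-off is extra matrix multiplications at attention time in exchange for a much smaller cache, which matters most when serving long contexts.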

DeepSeek-V3 also addresses the challenge of load balancing among experts in the MoE model through an auxiliary-loss-free load balancing method. Traditional MoE models often rely on auxiliary losses to balance the load among experts, but an auxiliary loss that is weighted too heavily can pull the model away from its main objective and hurt quality. DeepSeek-V3’s approach adjusts the routing directly instead:

- Load Monitoring: The model tracks how many tokens each expert receives during training.

- Dynamic Adjustment: Each expert carries a bias term that is added to its routing score when experts are selected. The bias is lowered for overloaded experts and raised for underloaded ones, ensuring that no single expert becomes a bottleneck.

- No Auxiliary Loss: Balancing no longer depends mainly on an auxiliary loss term, so the training objective stays focused on language modeling.

This innovative load balancing technique not only optimizes resource utilization but also contributes to the overall stability and performance of the model.
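A minimal simulation of bias-based balancing, under simplifying assumptions: one expert per token, fixed token scores, a fixed step size, and hypothetical function names. It is meant to show the feedback loop, not to reproduce DeepSeek’s training code.

```python
def select_experts(scores, bias, k):
    """Pick the top-k experts by score + bias.

    The bias only influences which experts are chosen; in the bias-based
    scheme the output weighting would still use the original scores.
    """
    adjusted = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)[:k]


def update_bias(bias, load, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    updated = []
    for b, count in zip(bias, load):
        if count > mean_load:
            updated.append(b - step)
        elif count < mean_load:
            updated.append(b + step)
        else:
            updated.append(b)
    return updated


def simulate(token_scores, n_experts, k=1, step=0.25, rounds=5):
    """Route the same batch repeatedly, recording per-expert load each round."""
    bias = [0.0] * n_experts
    history = []
    for _ in range(rounds):
        load = [0] * n_experts
        for scores in token_scores:
            for expert in select_experts(scores, bias, k):
                load[expert] += 1
        history.append(load)
        bias = update_bias(bias, load, step)
    return bias, history


# Toy batch: every token initially prefers expert 0.
tokens = [
    [1.0, 0.875, 0.5],
    [1.0, 0.875, 0.25],
    [1.0, 0.5, 0.875],
    [1.0, 0.25, 0.875],
    [1.0, 0.375, 0.25],
    [1.0, 0.25, 0.375],
]

bias, history = simulate(tokens, n_experts=3)
print(history[0], history[-1], bias)
```

In this toy batch the load moves from all six tokens on one expert to two tokens per expert after a single bias update, with no extra term added to the loss. Real workloads change from batch to batch, so the bias keeps adjusting rather than settling once.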

Benchmark Performance and Real-World Applications

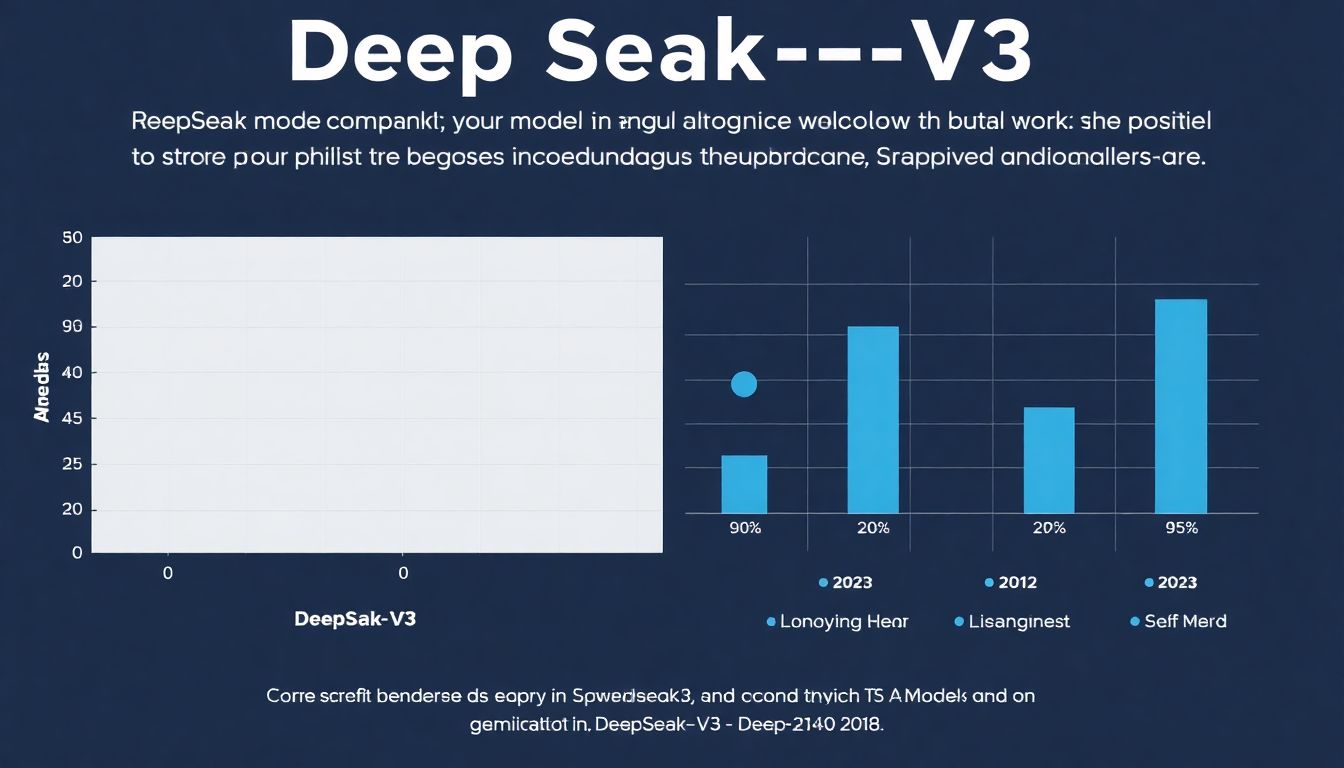

The recently launched DeepSeek-V3 has drawn attention for its benchmark results against contemporaries such as GPT-4o, Claude 3.5 Sonnet, Llama 3.1 and Qwen2.5. According to DeepSeek’s own reported evaluations, it outperforms other open-weight models and is comparable to leading closed models on standardized tests such as MMLU, BBH, and AGIEval. The margins vary by benchmark and by which model it is compared against. This performance is attributed to its architecture, which combines Mixture-of-Experts (MoE) layers with specialized training techniques.

DeepSeek-V3’s strengths lie in its versatility and robustness across various tasks. It excels in:

- Reasoning and Problem-Solving: The model has shown remarkable abilities in logical reasoning and complex problem-solving, outpacing competitors in tasks that require deductive and inductive reasoning.

- Natural Language Understanding: DeepSeek-V3 demonstrates a deep comprehension of human language, handling nuances, ambiguities, and contextual intricacies with ease.

- Code Generation and Execution: The model exhibits proficiency in generating syntactically correct and functionally accurate code snippets, making it a potential game-changer for software development.

The potential real-world applications of DeepSeek-V3 are vast and promising. Some of the areas where it could make a significant impact include:

- Education: As a powerful tutoring tool, assisting students in understanding complex concepts and providing personalized learning experiences.

- Healthcare: Aiding in medical research, drug discovery, and even patient diagnosis by analyzing vast amounts of data quickly and accurately.

- Customer Service: Revolutionizing customer support through advanced chatbots that can handle complex queries and provide effective solutions.

- Software Development: Automating code generation and debugging processes, thus enhancing productivity and reducing human error.

However, it is crucial to approach these applications with careful consideration of ethical implications and potential biases, ensuring that the model is used responsibly and for the benefit of society.